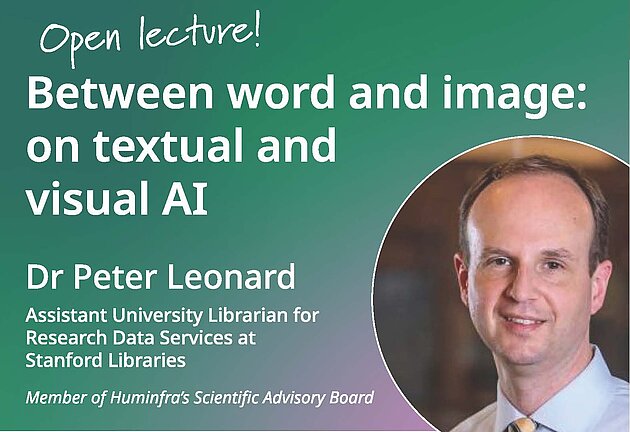

Between word and image: on textual and visual AI

Open lecture coordinated by the national infrastructure HUMINFRA on textual and visual AI.

Dr Peter Leonard

Assistant University Librarian for Research Data Services at Stanford Libraries

Member of Huminfra’s Scientific Advisory Board

Multimodal models, or Visual LLMs, are increasingly common in consumer and commercial contexts. These neural networks combine textual with visual understanding, offering some interesting possibilities for cultural heritage workers and scholars alike. This talk examines a few case studies, drawn from both Nordic and American contexts, that suggest possible areas of inquiry. From generating new Danish Golden Age paintings, to unifying archival metadata with transformer-based image description for accessibility, to finding latent patterns in a collection of 40,000 photographs of Lund, we will explore the unexpected possibilities of this emerging intersection between text and image.

22 September 2025, 15:00-16:30

LUX:C121

About the event:

Location: LUX:C121

Contact: anna.blader@humlab.lu.se